The total amount of data created, captured, copied and consumed is expected to hit 175 trillion gigabytes this year. That’s the equivalent of around 250 billion HD movies or 250 trillion books.

Data supports many business functions – from identifying new opportunities for growth to measuring performance – but as data volumes grow and data complexity increases, the need to effectively and efficiently store all this data becomes a more pressing concern for organisations.

Gartner predicts that worldwide IT spending will total $5.74 trillion in 2025, an increase of 9.3% from 2024. Data centre spending alone is expected to grow by 16% to $367.2 billion.

In this article, we’ll explore two of the main types of data stores: a data lake and a data warehouse. We’ll dive into the benefits and challenges of each type and explore an innovative new data management architecture known as a data lakehouse, which combines the best elements of both.

What is a data lake?

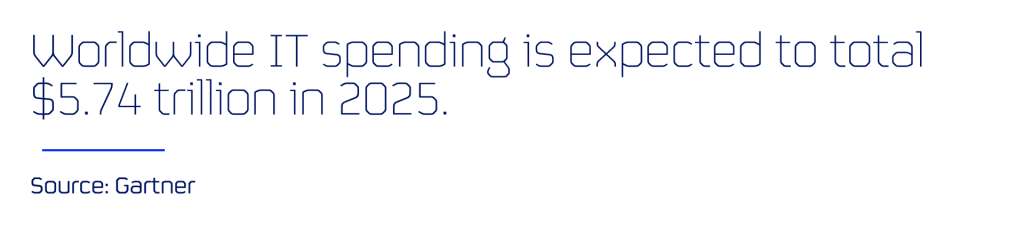

A data lake is a centralised repository that ingests and stores large volumes of data in its original form. It can accommodate all types of data from any source – whether that’s structured, semi-structured or unstructured data.

Structured data includes things like names, addresses and dates. It’s the sort of data that tends to have a standardised format and is easily understood by machine learning algorithms. Unstructured data, meanwhile, isn’t so easily boxed. It can include things like social media posts, images or blogs like this one and is much harder for a machine to process.

Semi-structured data sits somewhere in between.

It doesn’t fit neatly into rigid rows and columns, but it still carries some organisation, such as tags or key-value pairs, which makes it easier for computers to process. Examples include emails, JSON files and website HTML.
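To make the three categories concrete, here is a minimal Python sketch. The records and the helpers `get_name`, `get_sender` and `get_hashtags` are hypothetical examples, not part of any library. A structured field sits at a known position, a semi-structured field has to be parsed and looked up by key, and unstructured text needs a heuristic (here a simple regex) before any structure can be pulled out of it.

```python
import json
import re

# Structured: every record follows the same fixed schema, like a row in a table.
customer_row = ("Jane Doe", "1 Example Street", "1990-01-31")
NAME, ADDRESS, DATE_OF_BIRTH = range(3)  # column positions defined by the schema

# Semi-structured: self-describing keys, but fields can vary from record to record.
email_json = '{"from": "jane@example.com", "subject": "Hello", "cc": ["sam@example.com"]}'

# Unstructured: free text with no machine-readable fields.
social_post = "Just finished a great book on the history of computing! #history"


def get_name(row):
    # Structured: the field is always at a known position.
    return row[NAME]


def get_sender(raw_json):
    # Semi-structured: parse first, then look the field up by key (it may be absent).
    return json.loads(raw_json).get("from")


def get_hashtags(text):
    # Unstructured: structure has to be inferred, here with a simple regex heuristic.
    return re.findall(r"#(\w+)", text)
```

Here `get_name(customer_row)` returns `"Jane Doe"`, `get_sender(email_json)` returns `"jane@example.com"` and `get_hashtags(social_post)` returns `["history"]`. The regex is only a stand-in for the much harder work of interpreting real unstructured content.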

Whether data is structured, semi-structured or unstructured, none of it is processed before it enters a data lake. Instead, it is put straight into storage in its original form and processed later.

By keeping the data in its raw form, organisations can preserve its fidelity and keep it flexible for a variety of business uses. When the data is eventually needed, it can be pulled from storage and processed for a particular purpose.

Due to the variety and volume of data contained within data lakes, they often require strong data management. Otherwise, they can very quickly become data swamps with low-quality and duplicated data. Further, as data lakes can store vast amounts of sensitive information, firms will have to ensure they are compliant with any relevant regulation and keep security front of mind.
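Strong data management can take many forms. As one illustration, the sketch below uses a hypothetical in-memory `ToyDataLake` that stores every payload unchanged, as described above, while keeping a small catalogue of where each file came from and a SHA-256 content hash, so exact duplicates are rejected. A real lake would sit on object storage with a dedicated catalogue service, and would also need access controls and compliance checks that are out of scope here.

```python
import hashlib
from datetime import datetime, timezone


class ToyDataLake:
    """Hypothetical in-memory stand-in for lake storage plus a simple catalogue."""

    def __init__(self):
        self.objects = {}    # path -> raw bytes, stored exactly as received
        self.catalogue = {}  # SHA-256 of content -> metadata about the stored copy

    def ingest(self, path: str, raw: bytes, source: str) -> bool:
        """Store raw bytes unchanged; return False if identical content is already stored."""
        digest = hashlib.sha256(raw).hexdigest()
        if digest in self.catalogue:
            # Exact duplicate: keep one copy instead of letting the lake fill with copies.
            return False
        self.objects[path] = raw  # no parsing or cleaning on the way in
        self.catalogue[digest] = {
            "path": path,
            "source": source,
            "ingested_at": datetime.now(timezone.utc).isoformat(),
        }
        return True

    def read(self, path: str) -> bytes:
        # Processing is deferred: whoever reads the data shapes it for their own purpose.
        return self.objects[path]
```

Ingesting the same bytes twice under different paths returns `False` the second time, so only one copy is kept. The hash check only catches byte-identical duplicates; near-duplicates would need more sophisticated matching.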

What is a data warehouse?

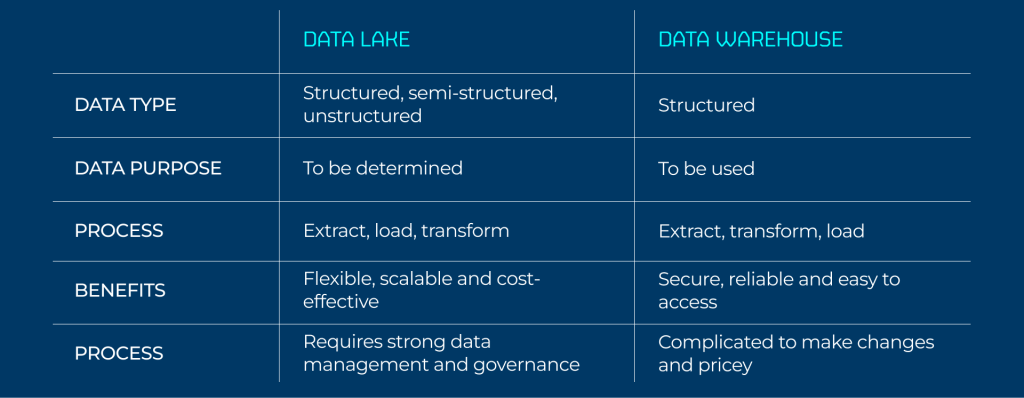

Data lakes and data warehouses are similar; both store and process data, but they do so in different ways.

In a data lake, data of all different forms can enter because it is stored following an extract, load, transform (ELT) process. This means the data is taken from a source (extracted), put into the data lake (loaded) and then converted into a format that can be analysed (transformed).

Because the ELT process transforms data when it reaches its destination, businesses are less limited in the ways they can use the data, allowing for on-demand flexibility and scalability.
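The ELT flow can be sketched in a few lines of Python. This is a toy under stated assumptions: a plain list stands in for the lake, JSON strings stand in for source records, and the function names are hypothetical. The point is that the raw records are loaded untouched, and each transformation runs later, at the destination, for a specific purpose.

```python
import json

# Hypothetical raw records as they arrive from a source system (note the missing field).
SOURCE_RECORDS = [
    '{"order_id": 1, "amount": "19.99", "country": "uk"}',
    '{"order_id": 2, "amount": "5.00"}',
]


def extract():
    # Extract: copy the raw records out of the source system.
    return list(SOURCE_RECORDS)


def load(raw_records, lake):
    # Load: write the records into the lake exactly as they are.
    lake.extend(raw_records)


def transform_for_revenue(lake):
    # Transform at the destination, on demand, for one purpose: total revenue.
    return sum(float(json.loads(raw)["amount"]) for raw in lake)


def transform_for_countries(lake):
    # A different transformation over the same raw data: which countries appear.
    return sorted({json.loads(raw).get("country", "unknown").upper() for raw in lake})


lake = []  # a plain list stands in for lake storage
load(extract(), lake)
```

Both `transform_for_revenue` and `transform_for_countries` read the same raw records, and the missing `country` field only has to be dealt with by the transformation that actually needs it.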

By contrast, data warehouses store their data following an extract, transform, load (ETL) process. This means that the data must be converted into a format that can be analysed before it can enter the warehouse.

Because the data is cleaned and standardised before it enters the warehouse, the transformation has to be designed with a specific business purpose in mind, whether that’s analytics or business intelligence. While this can limit the ways an organisation can use its processed data, it tends to produce more reliable, accurate and detailed data. This is because the ETL process provides a consolidated, consistent and historical view of an organisation’s data, making it easier to analyse, maintain its quality and meet regulatory standards.
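For contrast, here is a minimal ETL sketch over the same kind of JSON records. The `Order` schema, the cleaning rules and the `etl` function are hypothetical. What it illustrates is that records are transformed to fit a fixed, purpose-built schema before loading, and anything that cannot be made to fit is rejected rather than stored.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    # Hypothetical warehouse table schema, fixed up front for revenue reporting.
    order_id: int
    amount: float
    country: str


def transform(raw):
    # Transform before loading: parse, clean and standardise one record.
    rec = json.loads(raw)
    return Order(
        order_id=int(rec["order_id"]),
        amount=round(float(rec["amount"]), 2),
        country=str(rec.get("country", "unknown")).upper(),
    )


def etl(raw_records, warehouse, rejected):
    # raw_records is assumed to be the output of the extract step.
    for raw in raw_records:
        try:
            row = transform(raw)
        except (KeyError, TypeError, ValueError):
            # Records that cannot be made to fit the schema never reach the warehouse.
            rejected.append(raw)
            continue
        warehouse.append(row)  # Load: only clean, consistent rows are stored.
```

Given one well-formed record, one with `order_id` as a string, one with no `order_id` and one that is not JSON at all, the first two are loaded as `Order` rows and the last two end up in `rejected`. Keeping rejects in a separate list, rather than silently dropping them, makes data quality easier to audit.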

Which is better, a data lake or data warehouse?

Data lakes and data warehouses are two distinct approaches for managing and storing data in organisations. The choice between them depends on your organisation’s specific business needs and the nature of the data you are dealing with.

If, for example, your business is focused on machine learning and experimentation, the raw and wide-ranging nature of a data lake might be perfect for your organisation. On the other hand, if your business is primarily looking for targeted analysis and reporting, the consistency and accuracy of a data warehouse might be what you’re looking for.

What is a data lakehouse?

There is also a third option for those looking to resolve the limitations seen across data lakes and data warehouses: the data lakehouse.

As the name suggests, a data lakehouse blends elements of both a data lake and a data warehouse on a single platform. It offers the flexibility of a data lake with the structured querying and strong governance capabilities of a data warehouse.

By combining the best of both worlds, data lakehouses can help simplify a business’s data infrastructure, streamline its processes and minimise the chance of developing data silos. All this has made data lakehouses an increasingly popular choice for businesses. But how exactly do they work?

A data lakehouse typically has four key layers:

Ingestion layer: this layer pulls in data from a wide array of sources, handling structured, semi-structured and unstructured data.

Storage layer: this layer stores the ingested data, typically in an open file format such as Apache Parquet.

Metadata layer: this layer organises the metadata associated with the stored data, enabling ACID (atomicity, consistency, isolation, durability) transactions, indexing and time travel (querying earlier versions of the data), all of which help to enhance data integrity and quality.

Consumption layer: this layer is where users access the data in the lakehouse to carry out data-driven tasks across the organisation, such as machine learning jobs or data visualisation.
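The interplay between the storage, metadata and consumption layers can be illustrated with a toy table. The sketch below is loosely inspired by the way open table formats such as Delta Lake and Apache Iceberg record table versions as metadata over immutable data files, but the `ToyLakehouseTable` class and its methods are hypothetical and are not any real format’s API. It shows only two ideas from the metadata layer: a commit becomes visible all at once by appending a single log entry, and earlier versions remain readable (time travel). Concurrency control, durability and indexing are left out.

```python
import copy


class ToyLakehouseTable:
    """Hypothetical table: immutable data 'files' plus a metadata log of versions."""

    def __init__(self):
        # Storage layer stand-in: file name -> rows (a real lakehouse would use e.g. Parquet files).
        self.files = {}
        # Metadata layer stand-in: entry i is the set of live file names at version i.
        self.log = [set()]

    def commit(self, add=None, remove=()):
        """Publish a new version in one step; return its version number."""
        add = add or {}
        live = set(self.log[-1])
        missing = set(remove) - live
        clashing = set(add) & set(self.files)
        # Validate everything first so a failed commit changes nothing.
        if missing:
            raise ValueError(f"cannot remove files not in the table: {sorted(missing)}")
        if clashing:
            raise ValueError(f"data files are immutable; name already used: {sorted(clashing)}")
        # Write the new data files first; no version refers to them yet.
        self.files.update(copy.deepcopy(add))
        # Appending a single log entry is what makes the change visible to readers.
        self.log.append((live - set(remove)) | set(add))
        return len(self.log) - 1

    def read(self, version=None):
        """Consumption layer: read the latest version, or an earlier one (time travel)."""
        live = self.log[-1] if version is None else self.log[version]
        return [row for name in sorted(live) for row in self.files[name]]


table = ToyLakehouseTable()
v1 = table.commit(add={"part-0": [{"id": 1}], "part-1": [{"id": 2, "amount": -5}]})
v2 = table.commit(add={"part-2": [{"id": 2, "amount": 5}]}, remove=["part-1"])
```

After the second commit, `table.read()` returns the corrected row for `id` 2, while `table.read(version=1)` still returns the table as it was before the fix. Because old data files are never overwritten, every earlier version stays reconstructable from the log.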